I worked on Rose City Games’ recently released VR title, LOVESICK, as an environment artist. I created background models, environments, and interactive object models, and handled texturing and occasional VFX.

More about the game HERE.

Trailer is HERE.

This is a passion project that is still in development. It’s a mobile AR app that visualizes the huge sheets of ice that covered the landscape during the ice ages. It’s intended for use at scenic overlooks amid the dramatic landscapes of the Pacific NW.

The app is built in Unity and uses Mapbox to load terrain data at the user’s location, which the user then orients to match the real-world landscape. A layer of ice is then placed at a specified height, where the digital terrain masks it out, creating an overlay on the real world.
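The masking idea can be sketched outside Unity as a simple height comparison. This is only an illustration, not the app's actual code: the elevation grid, the `ICE_ELEVATION` value, and the function names are all hypothetical, and the real app presumably lets the rendered terrain occlude the ice rather than comparing numbers per cell.

```python
def ice_mask(terrain_heights, ice_elevation):
    """Return a grid of booleans: True where the ice surface sits above the terrain."""
    return [[ice_elevation > h for h in row] for row in terrain_heights]


def ice_thickness(terrain_heights, ice_elevation):
    """Return the ice depth above each terrain cell (0.0 where terrain pokes through)."""
    return [[max(0.0, ice_elevation - h) for h in row] for row in terrain_heights]


# Hypothetical 3x4 elevation grid in metres (stand-in for loaded terrain data).
terrain = [
    [120.0, 340.0, 910.0, 1500.0],
    [80.0, 260.0, 700.0, 1250.0],
    [40.0, 150.0, 480.0, 990.0],
]
ICE_ELEVATION = 600.0  # assumed height of the ice surface, in metres

mask = ice_mask(terrain, ICE_ELEVATION)
thickness = ice_thickness(terrain, ICE_ELEVATION)

# Text preview: '#' is visible ice, '.' is terrain rising above the ice sheet.
for row in mask:
    print("".join("#" if visible else "." for visible in row))
```

Raising or lowering `ICE_ELEVATION` changes which peaks stay exposed, which is the effect the specified ice height has in the overlay.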

The technical setup, where the pre-loaded scan is overlaid on the real world, was based on the system I put together for the Escape Room Ghost — it’s an AR experience tailored for a particular place. In this case, that place is a local scenic overlook.

This app is a work in progress, but here’s a demonstration of its current state.

More info on the Mapbox toolset can be found at mapbox.com.

These gifs came from a conversation with my sibling Ansel, who works with the LIGO collaboration and also teaches undergraduate physics. They were dissatisfied with the diagrams that were generally available when teaching students about spinning neutron stars that generate gravitational waves. The existing diagram they had been using illustrated several pieces of important information:

But the diagram also had some shortcomings:

It also didn’t portray some potentially helpful information that was shown in other diagrams, like the star’s magnetic field and the beams of light that it emits from its poles, which also don’t necessarily align with the spin axis.

So I took this as a challenge for my animation skills.

My goals here were:

Once I had the initial layout, I also created some alternate versions of the gifs with different mountain placement, just to show another possible configuration.

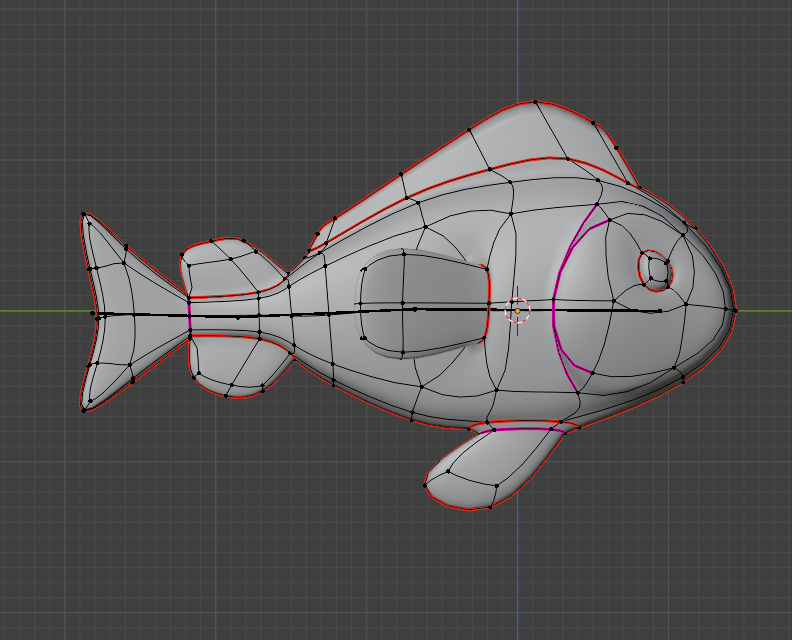

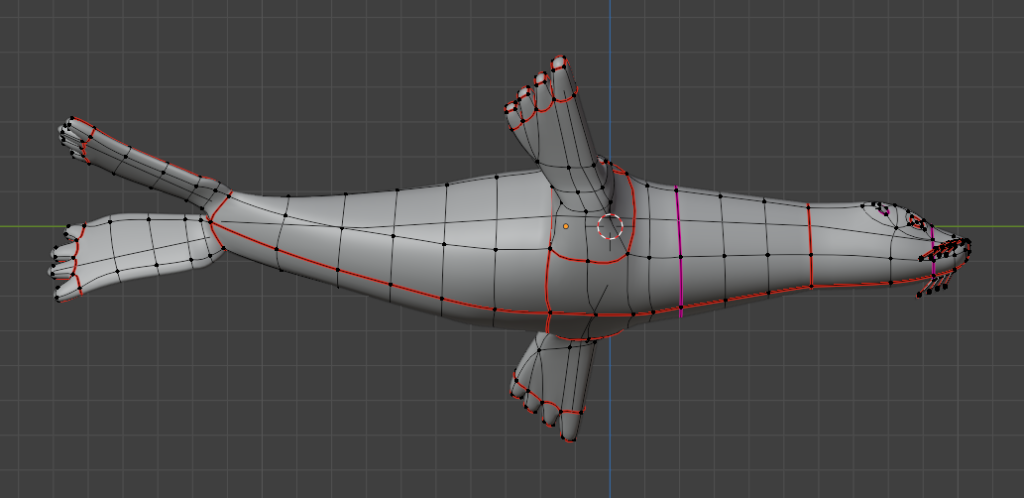

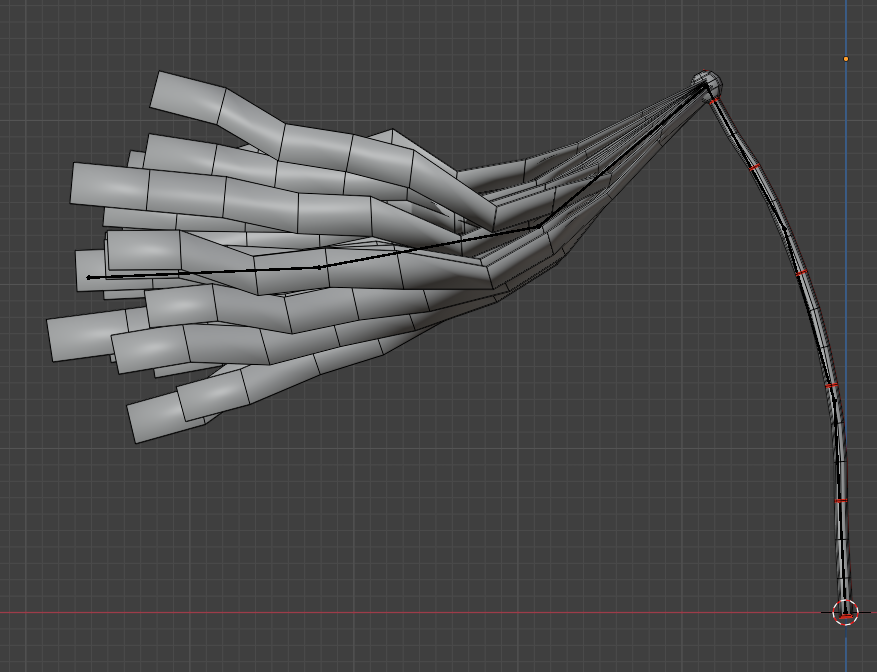

My role: Modeling, rigging, and texturing

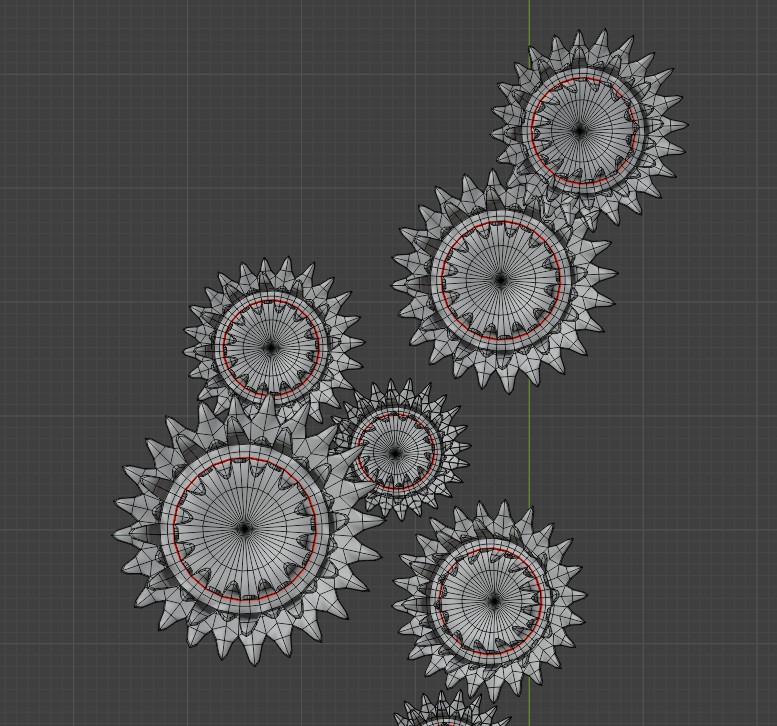

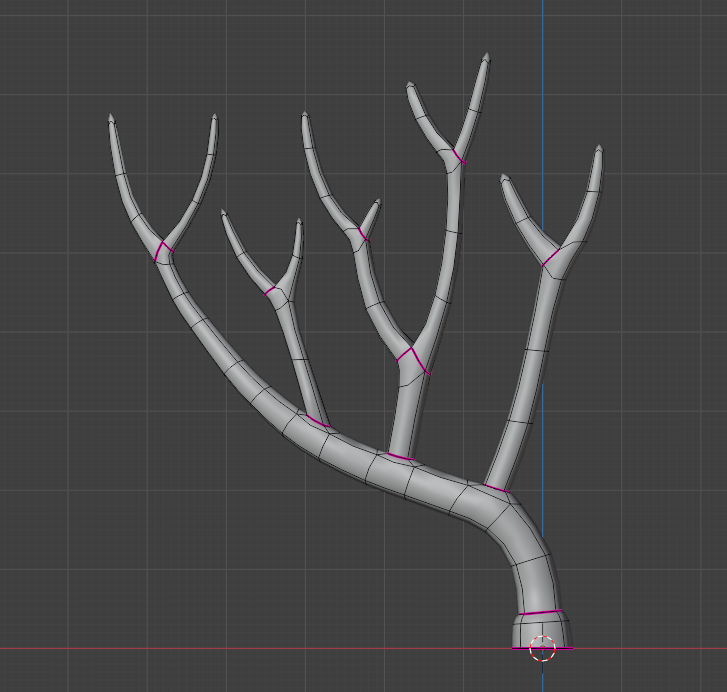

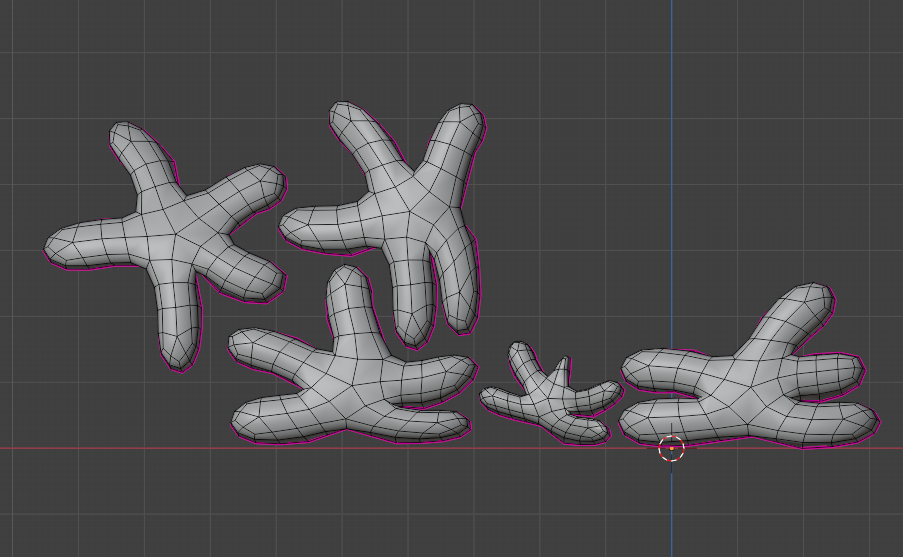

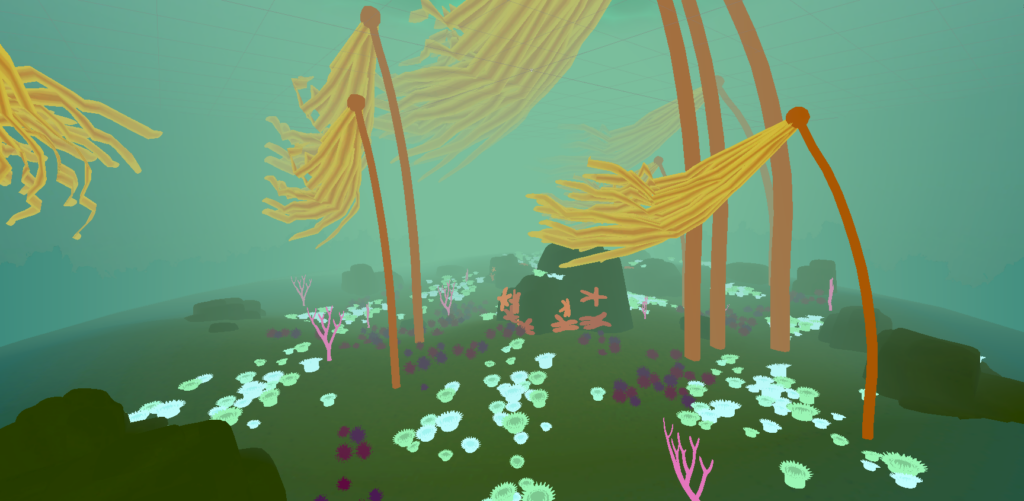

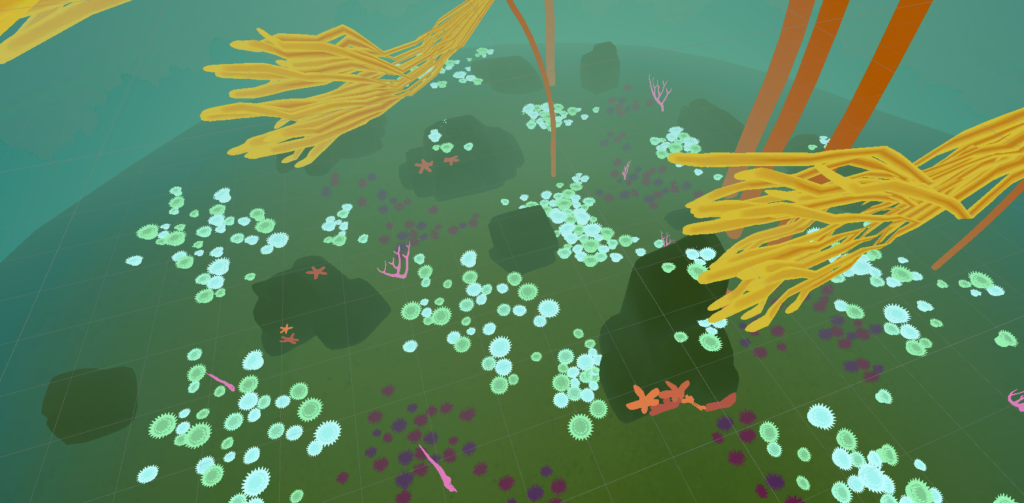

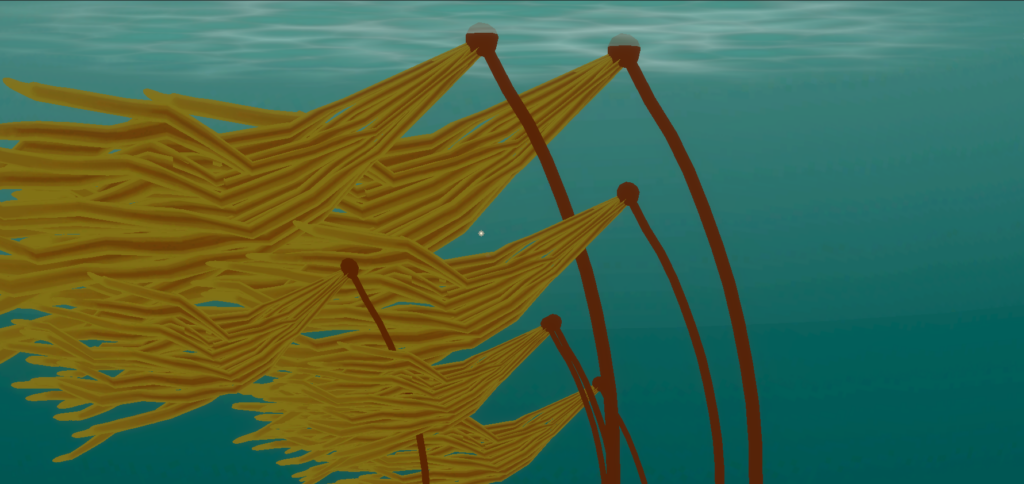

Some background graphics for the game Oscillarium.

The goal was for the environment to be calm, meditative, and welcoming — a bit like watching an aquarium. The models needed to be low-poly, stylized, and painterly.

Made in Blender, for Unity.

A prototype of an educational VR experience which I developed for 9iFX. Built for the Vive, the experience allows the user to observe and interact with a model of a Saturn rocket engine in various ways:

Since the models here were originally given to me as CAD files, they required some conversion and optimization to work in Unity. I reduced the polygon count pretty significantly in Blender while maintaining their fidelity.

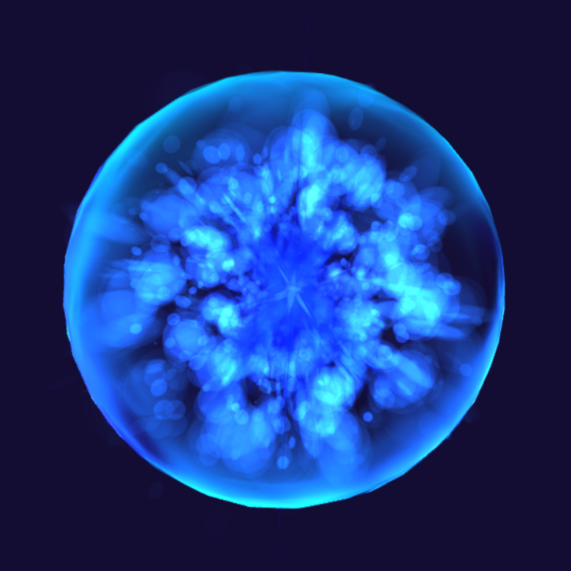

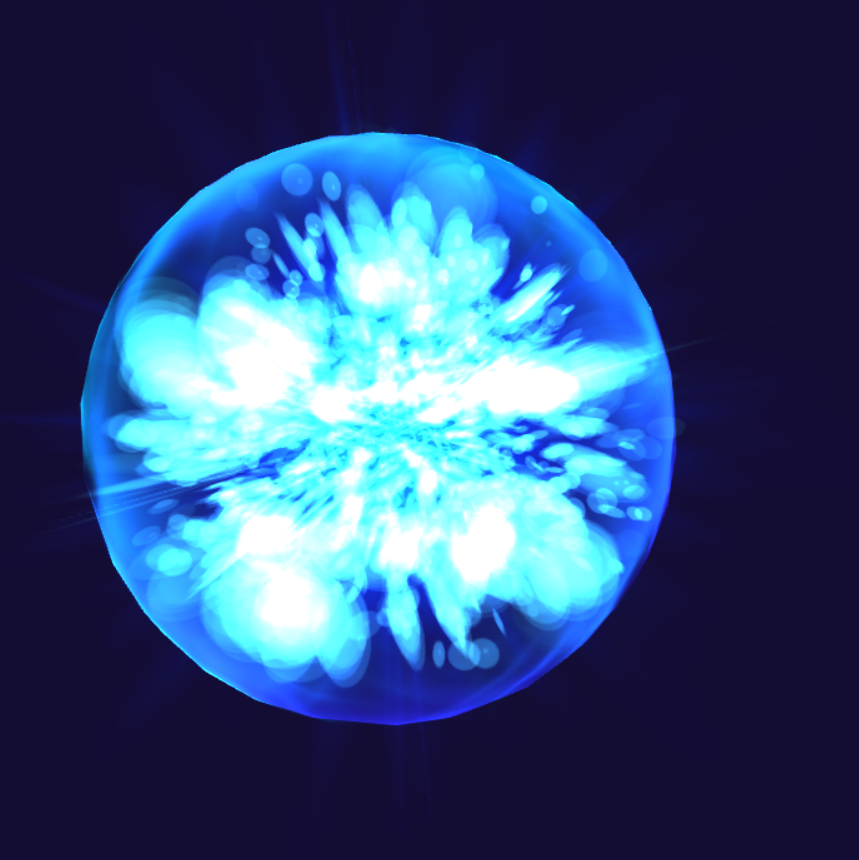

Like the neutron star gifs, this was a personal project based on a conversation with my sibling, who is a physicist. They pointed out that the usual representation of gravitational waves that appears in pop sci publications illustrates how gravitational waves move from their source, but doesn’t clearly show what gravitational waves actually are: distortions in spacetime.

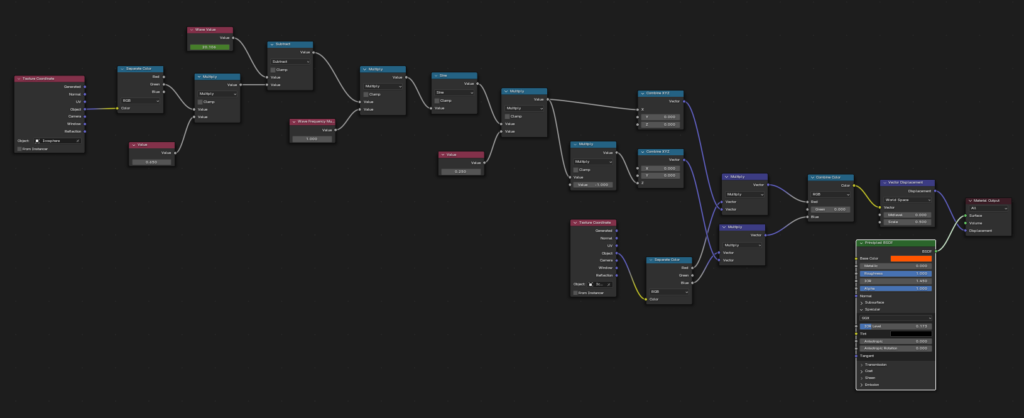

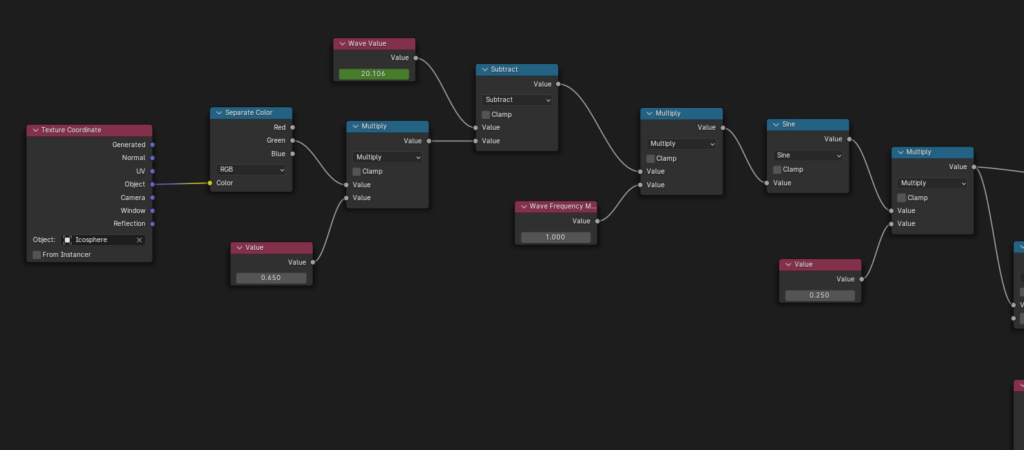

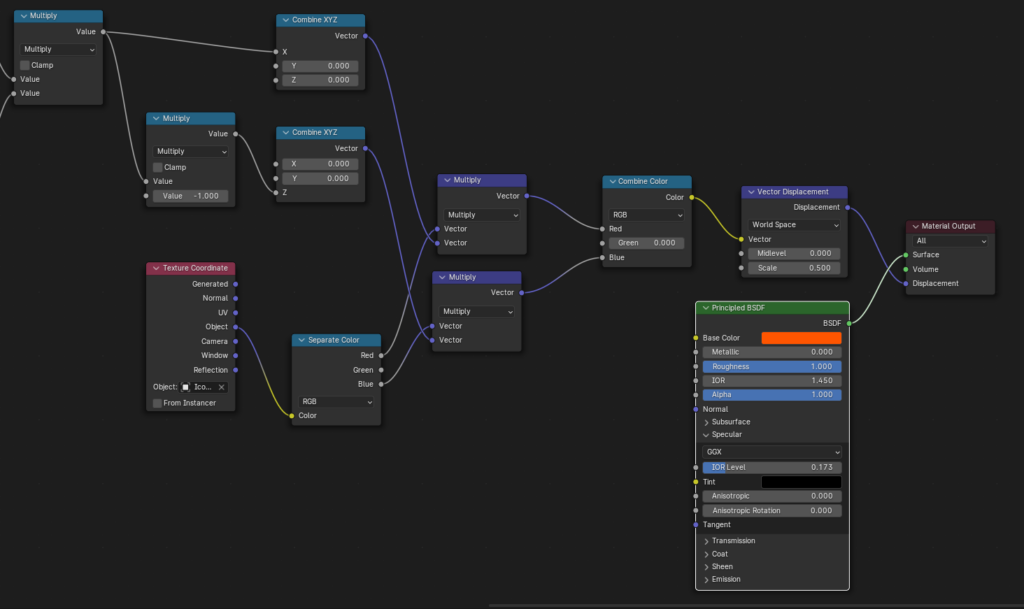

Based on what they told me, I constructed a shader in Blender to visualize the 3-dimensional deformations that affect an object when a gravitational wave passes through it.

I made the sphere gifs just to provide a variety of visualizations of how the distortions of a gravitational wave affect spacetime and the objects in it. This grid provides a little more of a visual breakdown.

(These animations are made at arbitrary scales, and do not show the actual amplitude, speed, or frequency of the waves. The shader is only meant to provide a visual of the kind of distortion the waves create.)
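For readers curious about the kind of distortion involved, here is a minimal sketch of the textbook "plus" polarization, where a wave travelling along the z axis stretches space along x while squeezing it along y, then swaps. This is not the Blender shader itself. The function names and the hugely exaggerated strain value are assumptions chosen so the effect is visible, in the same spirit as the arbitrary scales used in the animations.

```python
import math


def deform_plus(point, strain, phase):
    """Apply a plus-polarized distortion (wave travelling along z) to one point.

    x is stretched while y is squeezed by the same fraction; z is untouched.
    """
    x, y, z = point
    s = 0.5 * strain * math.cos(phase)
    return (x * (1.0 + s), y * (1.0 - s), z)


def ring(n, radius=1.0):
    """Return n points evenly spaced on a circle in the xy plane."""
    return [
        (radius * math.cos(2 * math.pi * k / n),
         radius * math.sin(2 * math.pi * k / n),
         0.0)
        for k in range(n)
    ]


def extent(points, axis):
    """Width of a point set along one axis (0 = x, 1 = y, 2 = z)."""
    values = [p[axis] for p in points]
    return max(values) - min(values)


EXAGGERATED_STRAIN = 0.4  # illustrative only; real strains are vastly smaller

points = ring(8)
for phase in (0.0, math.pi / 2, math.pi):
    deformed = [deform_plus(p, EXAGGERATED_STRAIN, phase) for p in points]
    print(f"phase={phase:.2f}  width={extent(deformed, 0):.2f}  "
          f"height={extent(deformed, 1):.2f}")
```

Stepping `phase` through a full cycle turns the circle into an ellipse that alternates between wide and tall, which is the basic motion the sphere gifs show.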

The next set of gifs represents the LIGO Hanford gravitational wave observatory, which measures spacetime distortions in the length of two huge “beam tubes” in the desert of Washington state. The beam tubes are built at 90 degrees to each other so that researchers can pinpoint where gravitational waves are coming from based on the differences in how the tubes are distorted. These gifs aim to provide a simple visual on what those differences look like.
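As a rough numerical companion, the sketch below estimates how differently two perpendicular arms change length under a simplified model: a plus-polarized wave arriving from straight overhead, with the wave's polarization rotated by some angle relative to the arms. The 4 km arm length matches LIGO's published arm length, but the strain value, the function names, and the single-polarization, overhead-arrival setup are simplifying assumptions, not a description of how the observatory analyzes its data.

```python
import math

ARM_LENGTH_M = 4000.0  # LIGO's arms are 4 km long


def arm_length_changes(strain, polarization_angle, arm_length=ARM_LENGTH_M):
    """Return (dx, dy): length change of the x arm and the y arm, in metres.

    Assumes a plus-polarized wave from directly overhead whose polarization
    axes are rotated by polarization_angle (radians) relative to the arms.
    """
    h = strain * math.cos(2.0 * polarization_angle)
    dx = 0.5 * h * arm_length
    dy = -0.5 * h * arm_length
    return dx, dy


def differential_length(strain, polarization_angle, arm_length=ARM_LENGTH_M):
    """Difference between the two arms' length changes, in metres."""
    dx, dy = arm_length_changes(strain, polarization_angle, arm_length)
    return dx - dy


ILLUSTRATIVE_STRAIN = 1e-21  # assumed order of magnitude, for illustration

for degrees in (0, 22.5, 45):
    delta = differential_length(ILLUSTRATIVE_STRAIN, math.radians(degrees))
    print(f"polarization angle {degrees:>4} deg -> arm difference {delta:.2e} m")
```

When the polarization lines up with the arms, one arm lengthens while the other shortens; at 45 degrees in this simplified model the two arms change by the same amount and the difference vanishes.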

My Role: Development, animation, and effects

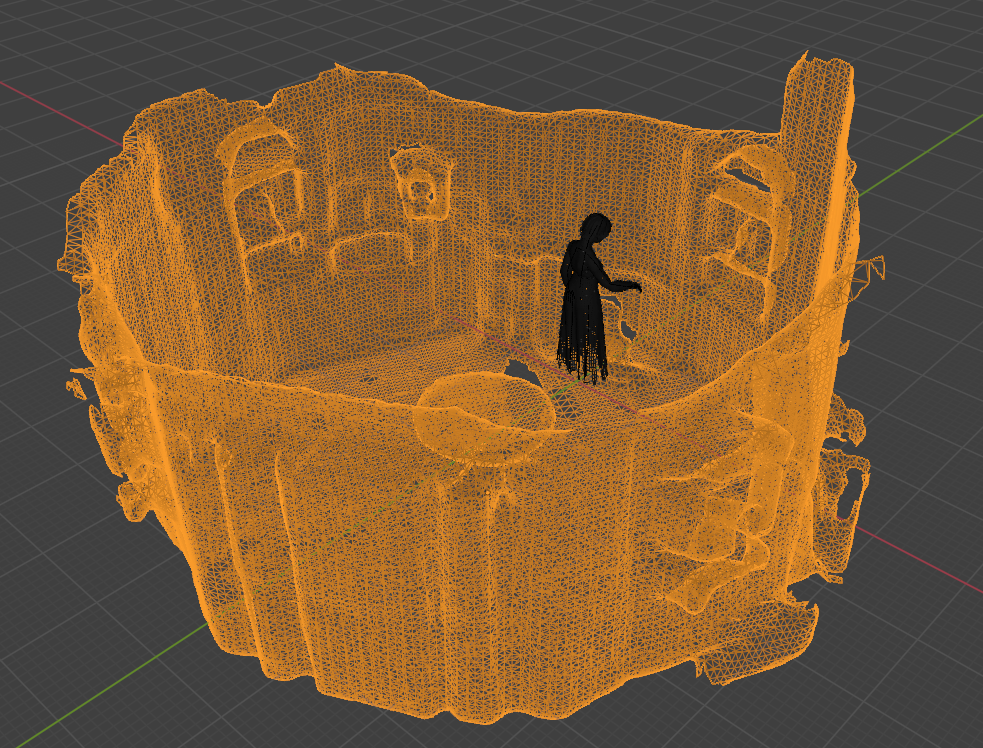

The escape room ghost project is a space-based mobile AR app. It’s an interactive experience that allows the user to see a ghostly figure appear and move around the room. The ghost interacts with objects in the room and provides hints to the escape room puzzles.

The app is set up before each use by matching a pre-existing 3D scan of the room with the room itself. This is done by looking around the room space and allowing the app to detect planes, so that the AR elements can remain steady and locked into place. The user can rotate and adjust the room scan mesh to match the room. The scan mesh then becomes invisible, but will still occlude the ghost and other AR elements, allowing them to move behind or through objects in the room.

The app then detects the presence of cards that the user finds and scans. Each of the cards triggers a unique animation in which the ghost appears in a puff of smoke, moves around the room to give the player a hint about the puzzle they are trying to solve, and then vanishes into smoke again.

The AR app did not end up being used, but some of the effects and animations can still be viewed in the escape room experience. The technical setup of the project did inspire one of my other ongoing projects though.

Created by Sprocketship for Mental Trap Escape Room Games.

I created these animated gifs just to explore short-format looped animations. All the models and animations were created in Blender. Some were inspired by reading Project Drawdown’s list of existing climate crisis solutions, others were just representations of things I saw around me. They’re intended to be compositionally bold, visually appealing, and maybe a little bit meditative.

As a student in Clackamas Community College’s first HoloLens Development course, I was part of the team tasked with creating an app to help students in CCC’s automotive courses visualize the workings of the gearbox in an automatic transmission. The interactions of the gears and the power flow of the device are notoriously hard to explain using 2D diagrams, so the transmission was chosen in part to showcase the power of AR as an educational tool. The app was started by the class as a whole and completed by a small group of students, myself included, after the course was over.

The Transmission app in action.

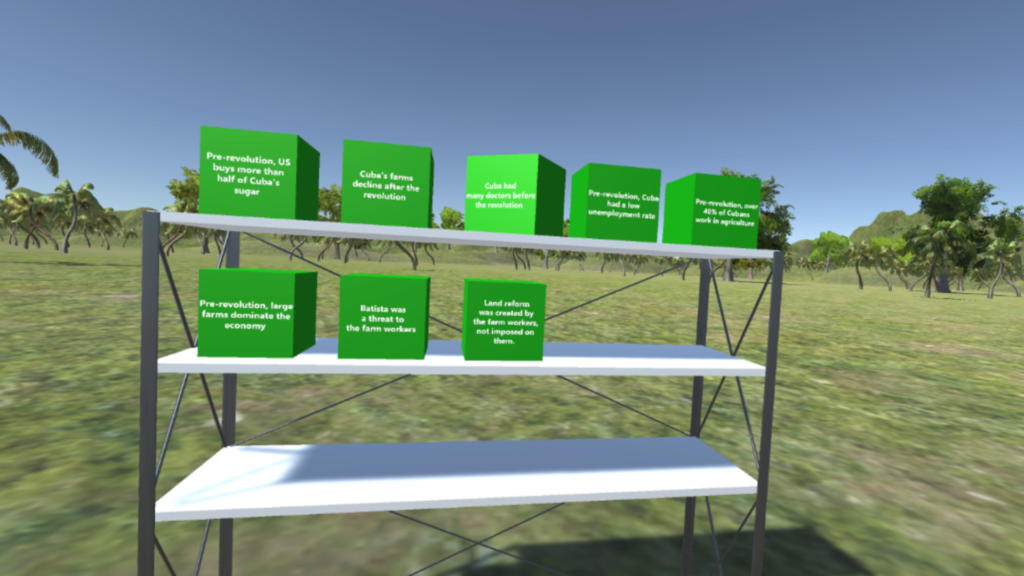

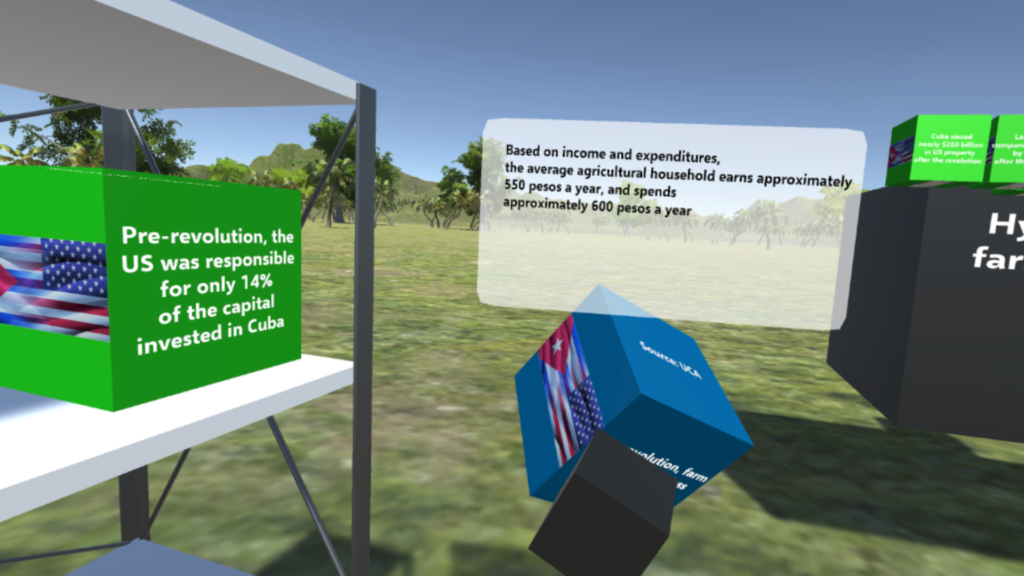

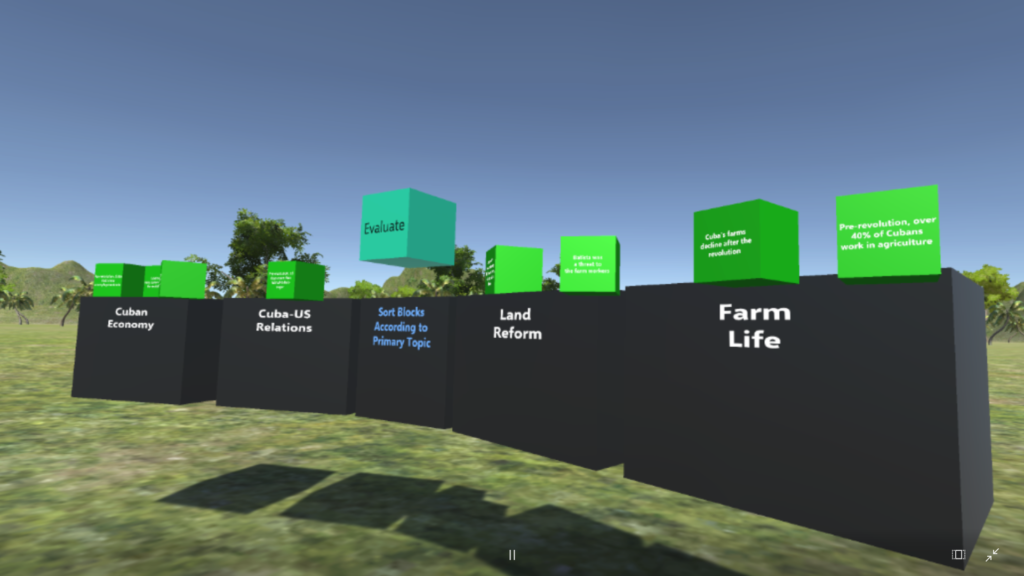

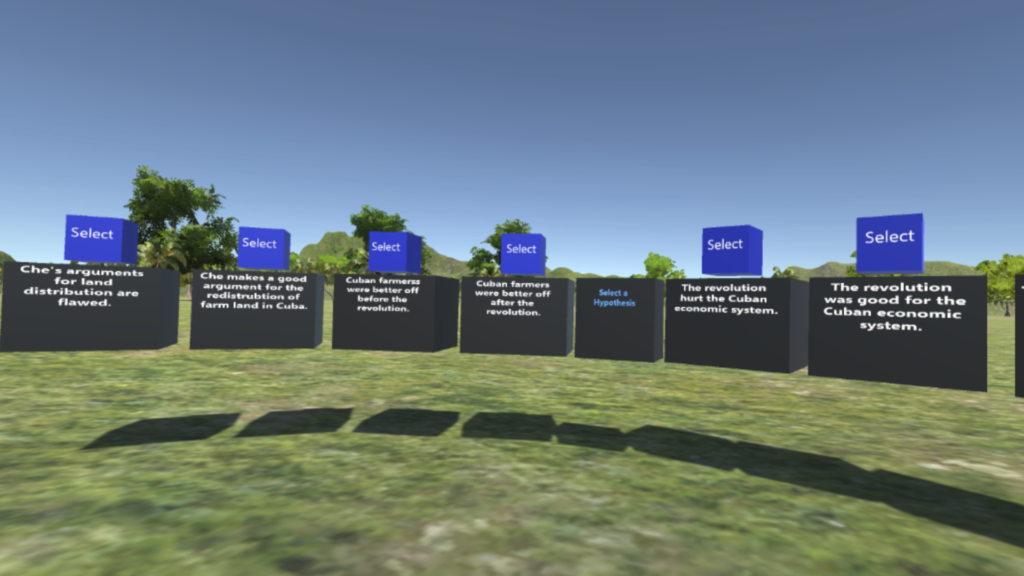

The Thinking Machine project is a VR experience by Shovels and Whiskey. It’s an educational tool that allows users to interact with information in the form of objects: selecting, moving, and categorizing customizable data “blocks”.

The experience is built in Unity using Windows Mixed Reality.

This project also has a testing version which was built to explore various interaction modes. This included different methods of moving around the scene, and of interacting with objects via the controllers.

Different combinations were then created and tested to see which ones were most intuitive to new or inexperienced VR users.

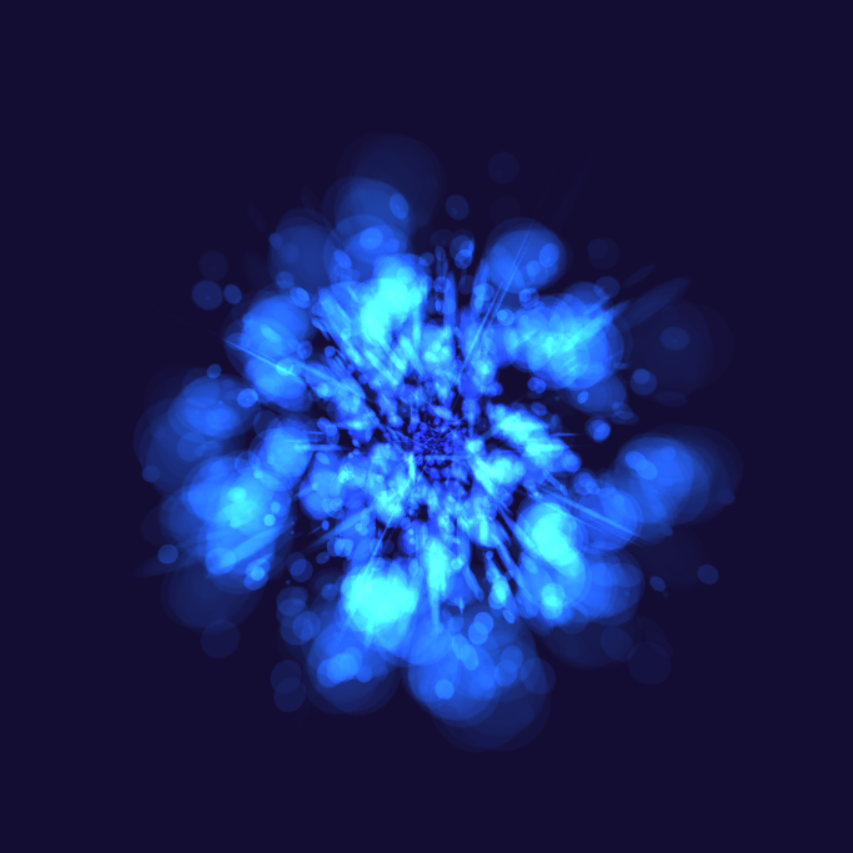

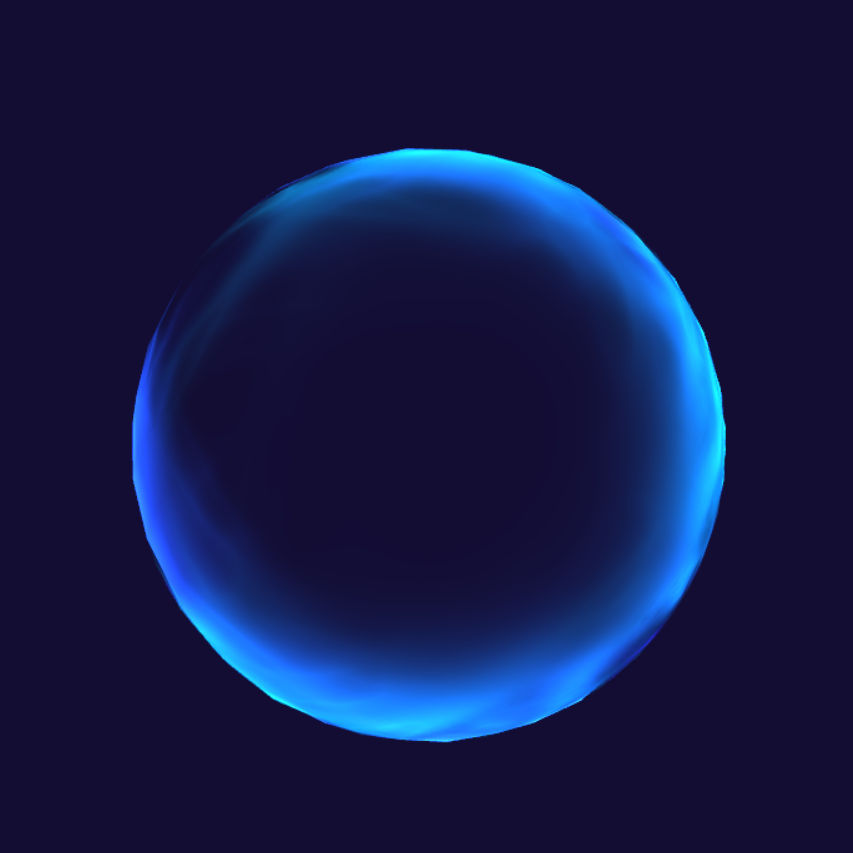

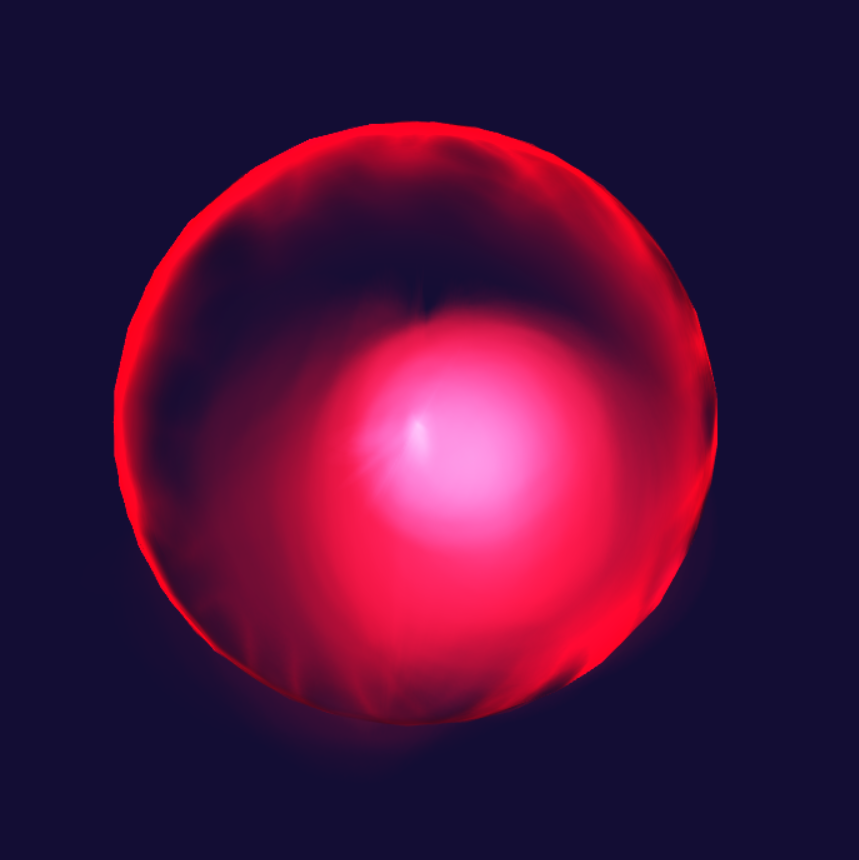

The Wisps are enemies in the fantastical VR fencing game Willowisp VR. They are real-time generative effects built in Unity, using a combination of particle systems and semi-transparent textures to create fire and magic effects.

Each Wisp is made up of simple particle systems that use partially transparent, additive textures to appear more complex. The limited number of particles keeps the effect efficient and the variety of textures makes each Wisp recognizable and visually engaging.
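To show why a few additive layers can read as something richer, here is a small sketch of additive blending on a single pixel. It is an illustration only: the colors, alpha values, and function name are made up, and in Unity this blending happens on the GPU through the particle material rather than in script.

```python
def blend_additive(dst, src, alpha):
    """Add a source color, scaled by its alpha, onto the destination color.

    Colors are (r, g, b) tuples in the 0..1 range; the result is clamped to 1.0.
    """
    return tuple(min(1.0, d + s * alpha) for d, s in zip(dst, src))


def composite(background, layers):
    """Blend every (color, alpha) layer onto the background in order."""
    pixel = background
    for color, alpha in layers:
        pixel = blend_additive(pixel, color, alpha)
    return pixel


# Hypothetical dark background and three overlapping particle layers.
background = (0.05, 0.05, 0.10)
layers = [
    ((1.0, 0.4, 0.1), 0.5),  # orange core
    ((1.0, 0.8, 0.2), 0.3),  # yellow glow
    ((0.2, 0.6, 1.0), 0.2),  # blue accent
]

result = composite(background, layers)
print(tuple(round(c, 2) for c in result))
```

Because each layer only adds light, overlapping areas get brighter instead of covering each other, and as long as nothing clamps at 1.0 the result does not depend on the order in which the layers are drawn.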

An example of particle system layers (left) and the texture they use:

The edge layer’s opacity is limited to the outside rim, which gives the Wisp a clearly defined spherical edge from any angle. This matches with the object’s collider in-game and shows the player clearly where their target is.
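One way to picture a rim-only texture is as an alpha value that depends on distance from the texture's center. The sketch below generates that kind of falloff; the `inner` and `outer` thresholds and the function names are hypothetical, and the actual Wisp textures were painted rather than computed. It also assumes the particle is drawn as a camera-facing billboard, which is what lets a flat ring read as a sphere's edge from any angle.

```python
import math


def smoothstep(edge0, edge1, x):
    """Smoothly ramp from 0 to 1 as x moves from edge0 to edge1."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def rim_alpha(u, v, inner=0.7, outer=0.95):
    """Opacity at texture coordinate (u, v), each in the 0..1 range.

    Transparent in the middle, ramping to opaque near the inscribed circle,
    and fully transparent outside it so the silhouette stays crisp.
    """
    dx, dy = 2.0 * u - 1.0, 2.0 * v - 1.0
    r = math.sqrt(dx * dx + dy * dy)
    if r > 1.0:
        return 0.0
    return smoothstep(inner, outer, r)


# Text preview of the texture: denser characters mean higher opacity.
SHADES = " .:*#"
SIZE = 17
for row in range(SIZE):
    line = ""
    for col in range(SIZE):
        a = rim_alpha(col / (SIZE - 1), row / (SIZE - 1))
        line += SHADES[min(len(SHADES) - 1, int(a * len(SHADES)))]
    print(line)
```

Narrowing the gap between `inner` and `outer` gives a thinner, harder ring, which would make the edge of the target even more distinct.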

If you want to know more about my process for particle effects, also check out this talk I did for the Portland Indie Game Squad on introductory Unity particles.

Green Loop VR for Shovels + Whiskey, with the City of Portland Bureau of Planning and Sustainability, is a virtual reality visualization of potential sites of development for the city’s Green Loop walking path.

The experience invites the user to explore familiar spaces of Portland, both as they are now and as they could be as part of the Green Loop path. It’s an architectural diagram brought to life, where the user moves through points of interest at their own pace and can view the potential changes to the scene in each location.

Made to engage the public in the city’s decision-making process, Green Loop focuses on ease-of-use, clarity, and education through visual immersion.

You can find more info on this project here.